Importing files using the native API

Background

The native API of M-Files is pretty powerful: everything you can achieve with the admin client or the client tools, you can also do programmatically through the API.

It's not available as a separate download; it is installed along with M-Files.

Requirements

Creating a new object

Inserting data into an existing M-Files vault is pretty straightforward. To create a new object instance, you need four things, all of which are easy to get at through the API:

- The Id of the ObjectType you want to insert

- A list of all properties that are required

- A working connection to a vault

- Optional: Source Files (= ordinary files from the filesystem) that will be attached

The first two depend on the metadata of the vault, whereas the last two work the same for every object or vault.

An intimate knowledge of the metadata structure

As mentioned above, inserting a new object requires knowledge about the structure of the metadata. For example, if you want to create a document instance and the class you are trying to create has a required Date property, then you need to add a property named Date of type Date so that those requirements are fulfilled when you create a new document instance.

This tightly couples creating instances to your metadata structure. If, for example, you decide to rename a property of your document, you need to update your code to mirror that change.

In my case, which I'd argue is probably the default, classes and properties are not set in stone and will evolve over the course of the engagement, so I set out to come up with a better solution.

Making it flexible

Now the thing is, the API supports accessing the metadata as well. This means that you can enumerate object types, classes and their properties, find out which DataType backs a specific property, and enumerate value lists.

With this in mind, it's possible to decouple the insertion process from the specifics of the structure, as the structure can be reflected upon at insertion time.

This not only generalizes the code doing the insertion, it also decouples it from knowing anything about the metadata in advance and therefore makes reuse possible.
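
To make the idea a bit more concrete, here is a rough Python sketch of converting raw text values based on a property-to-type mapping that is looked up at insertion time. It does not call the M-Files API: `load_property_types`, the type names and the converters are all hypothetical stand-ins for whatever the vault's metadata actually reports.

```python
from datetime import date, datetime


def load_property_types():
    """Hypothetical stand-in for reading the metadata from the vault.

    A real importer would ask the vault for the properties of the class
    and their data types instead of returning a fixed dictionary.
    """
    return {"Name": "text", "Date": "date", "Pages": "integer"}


# Assumed type names mapped to functions that parse the raw text value.
CONVERTERS = {
    "text": str,
    "integer": int,
    "date": lambda raw: datetime.strptime(raw, "%Y-%m-%d").date(),
}


def convert_row(row, property_types):
    """Convert the raw strings of one row according to the reflected types."""
    converted = {}
    for name, raw in row.items():
        if name not in property_types:
            raise KeyError(f"unknown property: {name}")
        converted[name] = CONVERTERS[property_types[name]](raw)
    return converted
```

Because the mapping is fetched when the import runs, renaming or adding a property only changes the input data, not the code doing the conversion.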

Making it transparent

When you're inserting a ton of files, you'll need reporting: you need to know which files the import attempted, which of those succeeded and which did not.

Further, for the files that failed to import, you'd want an error message that tells you what went wrong, so that you can go back and fix it.

Iterative approach

Next, you'd want to fix those errors quickly and retry the process, skipping the files that were already imported successfully (so as not to create duplicates), while retrying those that failed before and updating their status again.

Both fixing those errors and repeating the process should be easy, so that you are able to make progress quickly.
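
The retry behaviour described above can be sketched in a few lines of Python. This is only an illustration under assumptions: the column names `Id` and `Status`, the status text `success` and the `insert` callback are made up for the example and stand in for the real insertion into the vault.

```python
def run_pass(rows, insert):
    """Run one import pass over the rows, skipping those already imported.

    `rows` is a list of dictionaries, `insert` is a (hypothetical) function
    that creates the object and returns its Id or raises on failure.
    """
    for row in rows:
        if row.get("Status") == "success":
            continue  # imported in an earlier pass, do not create a duplicate
        try:
            row["Id"] = str(insert(row))
            row["Status"] = "success"
        except Exception as exc:
            # Keep the error message so the row can be fixed and retried.
            row["Id"] = ""
            row["Status"] = str(exc)
    return rows
```

Calling `run_pass` a second time on the same rows only touches the ones that failed before, which is what makes the fix-and-retry loop cheap.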

The Solution

Putting it all together

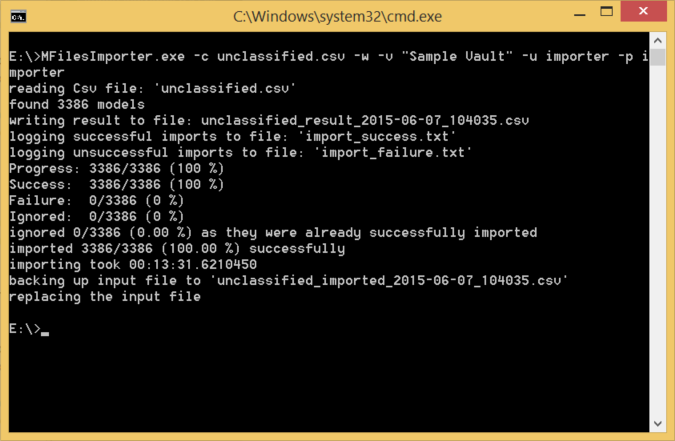

For those reasons I chose to write a CSV importer that inserts new object instances by reading in a comma-separated file.

The first row holds the column headers. The column headers consist of the object type, class name and all property names. Each following row represents an item that will be inserted.

The insertion process adds two additional columns: the first holds the Id of a successfully imported item, the second holds the import status, which is either success or an error message. A backup of the input file is saved before insertion takes place, so that the two files can be compared for a granular analysis.
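
As a minimal sketch of that file handling in Python (not the actual importer code): the column names `Id` and `Status`, the `.bak` suffix for the backup and the `insert` callback are assumptions made for the example, and the sketch performs a single pass over all rows.

```python
import csv
import shutil

ID_COLUMN = "Id"          # assumed name of the first added column
STATUS_COLUMN = "Status"  # assumed name of the second added column


def import_csv(path, insert):
    """Back up the CSV file, import every row, write the results back.

    `insert` is a hypothetical function that receives the row's values,
    creates the object and returns its Id, or raises on failure.
    """
    shutil.copyfile(path, path + ".bak")  # backup before anything changes

    with open(path, newline="", encoding="utf-8") as f:
        reader = csv.DictReader(f)  # first row holds the column headers
        fieldnames = list(reader.fieldnames or [])
        rows = list(reader)

    for column in (ID_COLUMN, STATUS_COLUMN):
        if column not in fieldnames:
            fieldnames.append(column)

    for row in rows:
        values = {k: v for k, v in row.items()
                  if k not in (ID_COLUMN, STATUS_COLUMN)}
        try:
            row[ID_COLUMN] = str(insert(values))
            row[STATUS_COLUMN] = "success"
        except Exception as exc:
            row[ID_COLUMN] = ""
            row[STATUS_COLUMN] = str(exc)

    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerows(rows)
```

After a run, the original file sits next to the updated one, so a diff of the two shows exactly which rows received an Id and which received an error message.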

The importer itself is implemented as a console application, which makes automating and repeating the process very easy. Rerunning an import can be as simple as hitting the up arrow key and pressing Enter.

With this solution we achieve the following goals:

- Flexible: The importer can be reused across vaults and you can use any kind of tool to create the CSV file.

- Transparent: The CSV file is updated with the results, so you can clearly see what worked and what did not.

- Iterative: You can easily update the data with Excel / Calc and start the import again.

- Automatable: The command-line application lends itself to automation without any user interaction.

Editing the CSV File

Viewing and editing the CSV file is easy, as there are plenty of editors that can handle the format.

For example, Microsoft Excel and the free LibreOffice Calc are well-known tools that make navigating and updating the CSV file easy, as you can filter for Error or Success and bulk edit similar rows.

Additional Note about performance

Generalized and flexible code is usually not as performant as code that can take advantage of special cases. In the software development process, performance is often the stepchild: correctness comes first, and that's often where it ends. In this case, though, performance was an important factor, considering the sheer number of files being inserted. If inserting each file takes too long, those seconds really do add up!

Luckily, most of the time lost in the insertion process proved to be lookups: lookups of text strings to find their corresponding object in the vault and use its Id as a property value. Caching those values improved performance significantly. The drawback is that changes in the metadata won't be picked up mid-insertion.
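
The caching idea can be illustrated with a small Python wrapper. It is a sketch under assumptions, not the importer's code: `lookup` stands for whatever (hypothetical) function resolves a text value to the Id of the matching object in the vault.

```python
class CachedLookup:
    """Remember the result of each (value list, text) lookup.

    `lookup` is a hypothetical function that asks the vault for the Id
    belonging to `text` in `value_list`; it is called once per distinct pair.
    """

    def __init__(self, lookup):
        self._lookup = lookup
        self._cache = {}

    def __call__(self, value_list, text):
        key = (value_list, text)
        if key not in self._cache:
            self._cache[key] = self._lookup(value_list, text)
        return self._cache[key]
```

The cache lives as long as the wrapper object, which is exactly why metadata changes made during a run go unnoticed: a cached Id is never looked up again.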

Update: Official M-Files CSV Import Tool

In the meantime, M-Files has published a CSV importer of their own, which according to its readme works in a similar way. The differences: it's a GUI application, which comes with different tradeoffs; it doesn't seem to update the CSV file but just creates an error output; and its license does not allow redistribution of the tool.

Update II: Sourcecode for MFilesImporter available

I finally had the time to push some code to GitHub! If you're brave, head over to the GitHub repo and take a look.

References

- Comma-Separated Values (https://en.wikipedia.org/wiki/Comma-separated_values)

- LibreOffice Calc (https://www.libreoffice.org/discover/calc/)

- GitHub Repo (https://github.com/8/MFilesImporter)

If you have a question, feel free to email me or write a comment, and thank you for reading!

Take care,

Martin